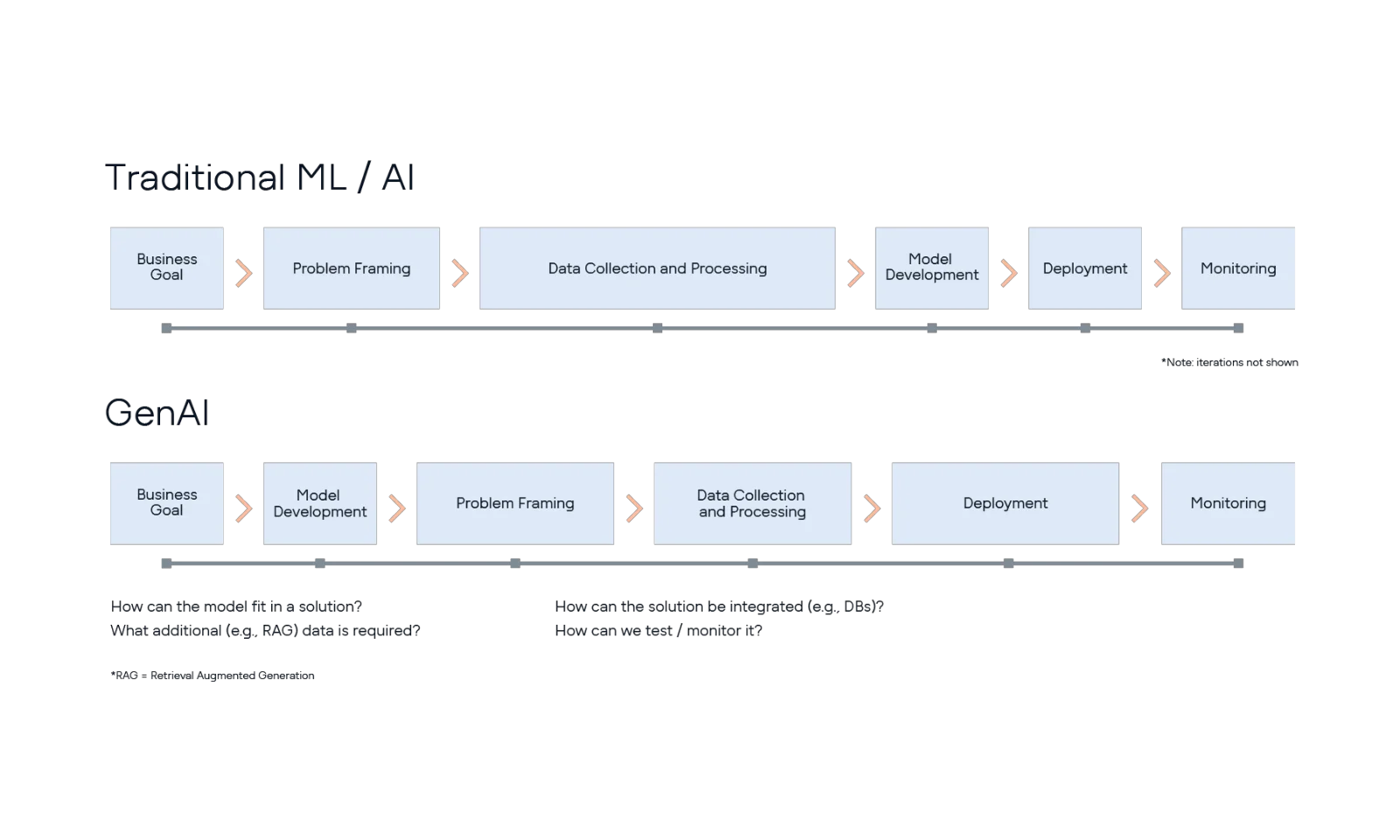

Throughout this series of articles, I have conflated the different flavours of AI (Gen AI and ‘old’ AI, including machine learning/ML, natural language processing/NLP, etc.), since the AI components of a solution generally fit a similar pattern: a defined input from the business (e.g., structured data or prompt text) produces a particular output (e.g., predicted numeric data or generated text), which flows through the rest of the solution and ultimately into the business.

However, at this point, it is worth taking a brief interlude to highlight the main difference between Gen AI and other varieties, in particular how the model is developed, since it has major implications for the practical stages of developing and implementing an AI solution.

Traditional AI models, such as those developed in ML projects, take a set of training data (often sourced for this particular purpose) and combine it with an algorithm selected for this same purpose, which results in a trained model specific to the data it has been trained on and the application it has been developed for. Going back to the example of identifying customer churn in the previous article, here the data would be specific to that organisation, with the resulting model tailored to that particular use case.
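To make the "data plus algorithm gives a trained model" pattern concrete, here is a minimal sketch in plain Python. It assumes a deliberately simple algorithm (a one-feature threshold rule, sometimes called a decision stump) and an invented churn data set. The feature name, the values and the helper functions are all hypothetical, not taken from any real project.

```python
# Invented toy data: (months_since_last_purchase, churned) for eight customers.
training_data = [(1, 0), (2, 0), (3, 0), (4, 0), (7, 1), (8, 1), (9, 1), (12, 1)]


def train_stump(data):
    """'Train' by choosing the threshold that misclassifies the fewest rows."""
    values = sorted({x for x, _ in data})
    # Candidate thresholds are the midpoints between neighbouring feature values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        # A customer is predicted to churn when the feature exceeds the threshold.
        return sum((x > threshold) != bool(y) for x, y in data)

    return min(candidates, key=errors)


def predict(threshold, months_since_last_purchase):
    """Apply the trained model: 1 means 'likely to churn', 0 means 'likely to stay'."""
    return int(months_since_last_purchase > threshold)


threshold = train_stump(training_data)
print(threshold, predict(threshold, 10), predict(threshold, 2))
```

The learned threshold is entirely a product of the training data, which is the point: a different organisation's data would give a different model, even with the same algorithm.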

This description glosses over the details of developing an ML model, with which many data scientists and ML engineers are all too familiar. For example, one of the first hurdles they face is getting hold of the data required for training a model, which can be a challenge in many organisations. Subsequent steps involve exploring the data, identifying appropriate algorithms and approaches, and then training and testing the various iterations of the model. All of this can be time-consuming and tedious, and is often viewed as an art as well as a science. Worst of all, the data scientist may discover that there is no signal in the data and that it is impossible to build a model to predict the phenomenon they were expecting to be able to predict!

However, one of the advantages of traditional AI approaches, such as ML, is that there are (relatively) mature and well-defined processes for developing and testing these models and then deploying them into production (for example, this paper from 2014 was something of a ‘call to arms’ for many practitioners in this area). This is not to say that the process is without challenges, and there are plenty of uncertainties (certainly relative to regular software projects), but once the model has been properly tested and approved (i.e., it ‘works’), it can be deployed following the various best-practice recommendations from MLOps.

On the other hand, generative AI approaches start with a general, pre-trained model (a large language model or, more generically, a ‘foundation model’), which is then customised to the particular application by supplying different information in the prompt (e.g., the wording of the prompt or specific organisational information) in order to steer the output of the foundation model in the desired way.
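By contrast, the customisation step for Gen AI can be sketched as nothing more than assembling text. The example below is a rough illustration only: `build_prompt` and `call_foundation_model` are hypothetical names, the organisational snippets are invented, and the model call is a stub standing in for whichever provider's API would actually be used.

```python
def build_prompt(instructions, context_snippets, question):
    """Combine task wording, organisational context and the user's question."""
    context = "\n".join(f"- {snippet}" for snippet in context_snippets)
    return f"{instructions}\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"


def call_foundation_model(prompt):
    """Hypothetical stand-in for a real model API; returns a canned string."""
    return f"[model response to a {len(prompt)}-character prompt]"


prompt = build_prompt(
    instructions="You are a support assistant. Answer using only the context.",
    # Invented organisational information, for illustration only.
    context_snippets=[
        "Contracts can be cancelled with 30 days' notice.",
        "Loyalty discounts apply after 24 months.",
    ],
    question="How much notice do I need to give to cancel?",
)
print(prompt)
print(call_foundation_model(prompt))
```

Nothing here is trained: the same pre-trained model serves every application, and only the wording and context passed to `build_prompt` change.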

Again, this high-level description misses out much of the detail. For example, the initial phase will likely involve iterating over different pre-trained models, as well as prompts and contextual data, to get a sense of whether the idea is feasible. There might then be a phase of problem framing to map the solution back to the business problem, followed by data collection and processing. Essentially, it is easier to prototype a solution rapidly, since the need for training data has been removed, but the problem framing that maps the solution to the business may be less well understood and may need to be bespoke to that particular solution, and may therefore take longer. Similarly, testing the performance of the model or solution may be less well understood (unlike the standard metrics used in ML, such as precision or recall) and will therefore also take longer. The same goes for deployment and monitoring, again because of the more bespoke nature of the solution.
